A ripple of excitement pulsed through the crowd. Paul Ford is blogging regularly again.

Until now, the Intelligence Trainer was strictly per-feed. Train a title, author, or tag on one site and it only affected that site. If you wanted to hide a topic everywhere, you had to repeat that training on each feed. With a few feeds, that’s fine. With a hundred, it’s tedious. With five hundred, it’s a non-starter.

If you’re a Premium Archive subscriber, you can now set any classifier to apply globally across all your feeds, or scoped to a specific folder. Train “sponsored” as a dislike once, and it hides sponsored stories everywhere. Train “kubernetes” as a like in your Tech folder, and it highlights kubernetes stories across every feed in that folder without touching the rest of your subscriptions.

Three scope levels

Every classifier pill in the Intelligence Trainer now shows three small scope icons on the left: a feed icon, a folder icon, and a globe icon.

- Per Site (feed icon) — The default. The classifier only applies to the feed you’re training. This is how classifiers have always worked.

- Per Folder (folder icon) — The classifier applies to every feed in the same folder. If you later move the feed to a different folder, the classifier stays tied to the original folder.

- Global (globe icon) — The classifier applies to every feed you subscribe to.

Click any scope icon to switch. The active scope is highlighted, and a tooltip explains each level. Your choice is saved with the classifier.

Real-world examples

Hide a topic everywhere. Subscribe to lots of news feeds but never want to read about a recurring topic? Open the trainer on any feed, add the topic as a text or title classifier, thumbs-down it, and click the globe icon. Done — it’s hidden across all your feeds.

Focus on a topic within a folder. Have a “Tech” folder with 40 feeds? Train “machine learning” as a like with the folder scope, and every feed in that folder will surface machine learning stories in your Focus view. Your cooking and sports feeds stay untouched.

Dislike a prolific author. Some authors are syndicated across multiple sites. Instead of training the same author name on each feed, set it to global and it applies everywhere at once.

Manage Training scope filter

The Manage Training tab now includes a scope filter alongside the existing sentiment, type, and search filters. You can quickly see all your global classifiers, all your folder-scoped classifiers, or narrow down to just per-site training.

Each classifier pill in the Manage Training list also shows a small colored scope badge, so you can tell at a glance whether a classifier is site-level, folder-level, or global.

How scoping works under the hood

When NewsBlur scores a story, it checks all classifiers that apply to that story’s feed — including any folder-scoped classifiers for the feed’s folder and any global classifiers. The same “green always wins” rule applies: if a story matches both a liked global classifier and a disliked per-site classifier, the story is marked as Focus.
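As a rough illustration of the scoring rule described above, here is a minimal sketch in Python. The `Classifier` and `Story` types, the field names, and the case-insensitive substring match are all assumptions made for this example; they are not NewsBlur's actual data model or code.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class Classifier:
    kind: str                       # "title" or "author" in this sketch
    value: str                      # exact phrase to look for
    score: int                      # +1 for a like, -1 for a dislike
    scope: str                      # "feed", "folder", or "global"
    feed_id: Optional[int] = None   # used when scope == "feed"
    folder: Optional[str] = None    # used when scope == "folder"


@dataclass(frozen=True)
class Story:
    feed_id: int
    folder: str
    title: str
    author: str = ""


def applies_to(c: Classifier, story: Story) -> bool:
    """Does this classifier's scope cover the story's feed?"""
    if c.scope == "global":
        return True
    if c.scope == "folder":
        return c.folder == story.folder
    return c.feed_id == story.feed_id


def matches(c: Classifier, story: Story) -> bool:
    """Hypothetical matching: case-insensitive phrase or author comparison."""
    if c.kind == "title":
        return c.value.lower() in story.title.lower()
    if c.kind == "author":
        return c.value.lower() == story.author.lower()
    return False


def score_story(story: Story, classifiers: Iterable[Classifier]) -> int:
    """Return 1 (Focus), 0 (Unread), or -1 (Hidden). Green always wins."""
    hits = [c.score for c in classifiers if applies_to(c, story) and matches(c, story)]
    if any(s > 0 for s in hits):
        return 1
    if any(s < 0 for s in hits):
        return -1
    return 0
```

With a disliked per-site classifier for "sponsored" and a liked global classifier for "kubernetes", a story titled "Sponsored: Kubernetes tips" scores as Focus in this sketch, because any matching like outranks any matching dislike regardless of scope.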

Scope controls work with all classifier types: titles, authors, tags, text, and URLs. They also work with regex classifiers.

Subscription tiers

| Feature | Tier Required |
|---|---|
| Per-site classifiers (default) | Free |
| Global and folder-scoped classifiers | Premium Archive |
| Manage Training scope filter | Premium Archive |

Global and folder-scoped classifiers are available now on the web. If you have feedback or ideas for improvements, please share them on the NewsBlur forum.

Remote AI Control

Answer permissions, approve plans, and respond to questions—all from your phone. Claude Code runs natively on Mac, Windows, or Linux—exactly as intended.

$ npm install -g crabigator

1 Install via npm

2 Run crabigator instead of claude

3 Click pairing link in terminal

crabigator — ~/projects/api

crabigator

Starting Claude Code session...

Connected to drinkcrabigator.com

╭─ Claude is thinking

│ Analyzing codebase structure

│ Reading src/app.rs

Multi-device

Check in from phone or desktop

No Tmux. No Tailscale. No Termius. Native integration built for Claude Code. Pair any device in seconds—monitor progress and respond to prompts on the go.

Session states (Thinking, Permission, Question, Complete) update in real time across all sessions.

Interactive

Respond from anywhere

Answer permissions, review plans, and respond to questions—all from your phone or any browser. Add custom instructions before approving to guide Claude's next steps.

- Permission prompts with one-tap approve or deny

- Add instructions before approving—guide Claude's approach

- Answer questions when Claude needs clarification

- Review plans before Claude starts implementing

Session Statistics

Real-time metrics that update as Claude works. Track prompts, completions, tool calls, and session duration at a glance.

Git Changes

See every file Claude modifies with visual diff bars. Additions in green, deletions in red—know exactly what's changing.

Cloud Dashboard

View sessions and respond to prompts from any browser. No VPN needed.

Mobile Access

Approve permissions and answer questions from your phone.

Claude Code

First-class support with deep hook integration.

Codex CLI

Works seamlessly with OpenAI's Codex CLI.

Semantic Diff Parsing

Changes grouped by language with function and method names extracted. See exactly which functions are being modified, not just file names.

Clickable File Links

Every file path is a link. Click to open directly in VS Code, Cursor, Zed, or your preferred editor.

CLI Inspection

Use crabigator inspect to view running instances. Perfect for automation.

Native Scrollback

Uses your terminal's primary buffer—unlike tmux. Scroll naturally.

Mouse Selection

Select and copy text naturally—no tmux capture mode.

Instant Pairing

Scan a QR code or enter a short code. Connected in seconds.

Free

$0/month

- Unlimited Claude Code sessions

- Answer permissions & questions

- Unlimited web and mobile access

- Real-time sync

- 10 min/day remote access

Pro

$3/month

- Unlimited Claude Code sessions

- Answer permissions & questions

- Unlimited web and mobile access

- Real-time sync

- Unlimited remote access

Origin

Why "Crabigator"?

Alligator Claude at Cal Academy

The Claude Navigator

Coming Soon

Native mobile apps

- Push notifications: Know instantly when Claude needs your approval

- Native performance: Smooth 60fps animations and instant response

- Offline support: Review sessions even without internet

drinkcrabigator.com

Some feeds I want to read every single story. Others I’m happy to skim once a week. And a few high-volume feeds I only check occasionally, so stories older than a day or two aren’t worth catching up on. Premium Archive subscribers get the site-wide “days of unread” setting, but it was too blunt, applying the same rule to everything. Now you can set how long stories stay unread on a per-feed and per-folder basis.

How it works

Open the feed options popover (click the gear icon in the feed header) and you’ll see a new “Auto Mark as Read” section. Choose how many days stories should remain unread before NewsBlur automatically marks them as read.

The slider goes from 1 day to 365 days, with a “never” zone at the far right for feeds where you truly want to read every story regardless of age. Choose “Default” to inherit from the parent folder or site-wide setting, “Days” to set a specific duration, or “Never” to disable auto-marking entirely.

Folder inheritance

Settings cascade down from folders to feeds. Set a folder to 7 days, and all feeds inside inherit that setting unless they have their own override. This is perfect for organizing feeds by how aggressively you want to age them out:

- Must Read folder: Set to “Never” so nothing ages out

- News folder: Set to 2 days since news gets stale fast

- Blogs folder: Set to 30 days for long-form content worth revisiting

- Individual feeds can still override their folder’s setting

The status text below the slider shows where the current setting comes from: the site-wide preference, a parent folder, or an explicit setting on this feed.
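The inheritance rule can be pictured with a small Python sketch. Everything here is an assumption made for illustration: the `resolve_auto_mark_read` function, the `"never"` sentinel, and the idea that nested folders are checked nearest-first are not taken from NewsBlur's code.

```python
from typing import List, Optional, Tuple, Union

NEVER = "never"                      # hypothetical sentinel for the "Never" option
Setting = Union[int, str, None]      # days, NEVER, or None meaning "Default"


def resolve_auto_mark_read(
    feed_setting: Setting,
    parent_folders: List[Tuple[str, Setting]],
    site_wide_days: int,
) -> Tuple[Setting, str]:
    """Return (effective setting, where it came from).

    parent_folders lists (folder name, setting) pairs, nearest folder first.
    """
    # An explicit setting on the feed always overrides its folder.
    if feed_setting is not None:
        return feed_setting, "feed"
    # Otherwise inherit from the first parent folder that has a setting.
    for name, setting in parent_folders:
        if setting is not None:
            return setting, f"folder: {name}"
    # Fall back to the site-wide "days of unread" preference.
    return site_wide_days, "site-wide"
```

The second element of the result mirrors the status text under the slider: it says whether the value comes from the feed itself, a parent folder, or the site-wide preference.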

Site settings dialog

You can also configure auto-mark-read from the site settings dialog (right-click a feed and choose “Site settings”). The same controls are available there, redesigned to match the popover style.

Availability

Per-feed and per-folder auto-mark-as-read settings are a Premium Archive feature, available now on the web. They work alongside the existing site-wide “days of unread” preference in Manage → Preferences → General → Days of unreads, which is also a Premium Archive feature.

If you have feedback or ideas for improvements, please share them on the NewsBlur forum.

The Intelligence Trainer is one of NewsBlur’s most powerful features. It lets you train on authors, tags, titles, and text to automatically sort stories into Focus, Unread, or Hidden. But until now, there were limits—you couldn’t train on URLs, regex support was something power users had been requesting for years, and managing hundreds of classifiers meant clicking through feeds one by one.

Today I’m launching three major improvements: URL classifiers, regex mode for power users, and a completely redesigned Manage Training tab.

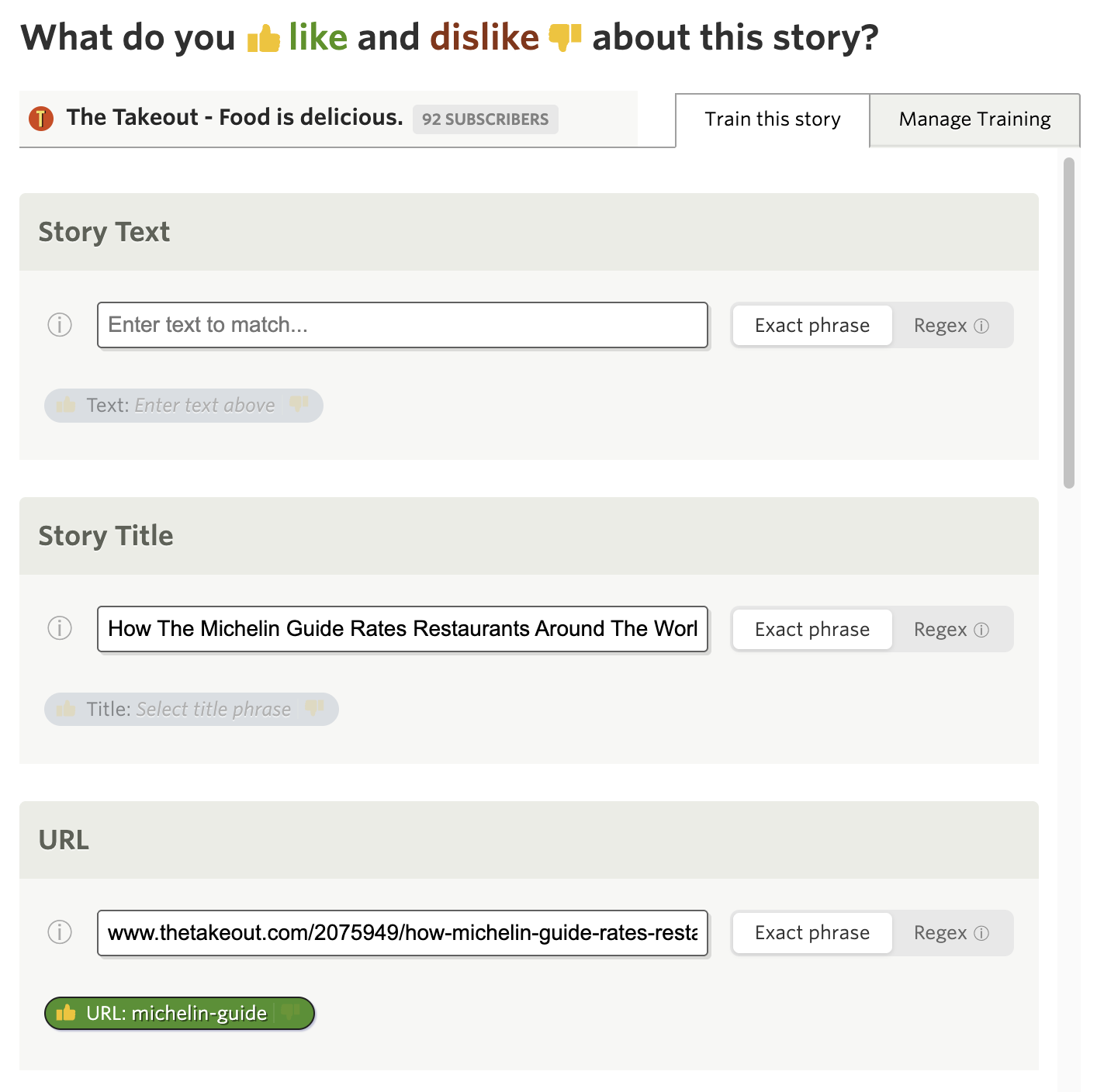

Train on URLs

You can now train on story permalink URLs, not just titles and content. This opens up new filtering possibilities based on URL patterns.

The URL classifier matches against the full story permalink. Some use cases:

- Filter by URL path: Like or dislike stories that contain `/sponsored/` or `/opinion/` in their URL

- Domain sections: Match specific subdomains or URL segments that indicate content types

- Landing pages vs articles: Some feeds include both, so filter by URL structure to show only what you want

URL classifiers support both exact phrase matching and regex mode. The exact phrase match is available to Premium subscribers, while regex mode requires Premium Pro.

When a URL classifier matches, you’ll see the matched portion highlighted directly in the story header, so you always know why a story was filtered.
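To make the matching and highlighting concrete, here is a hedged Python sketch. The `match_span` and `highlight` helpers, the example URL, and the choice to compare exact phrases case-insensitively are assumptions for illustration only; NewsBlur's own matching may differ.

```python
import re
from typing import Optional, Tuple


def match_span(permalink: str, pattern: str, is_regex: bool = False) -> Optional[Tuple[int, int]]:
    """Return the (start, end) of the first match in the permalink, or None."""
    if is_regex:
        m = re.search(pattern, permalink, re.IGNORECASE)
        return m.span() if m else None
    # Exact phrase mode: plain substring search (case-insensitive here by assumption).
    start = permalink.lower().find(pattern.lower())
    if start == -1:
        return None
    return start, start + len(pattern)


def highlight(permalink: str, span: Tuple[int, int]) -> str:
    """Wrap the matched portion in brackets, standing in for the UI highlight."""
    start, end = span
    return permalink[:start] + "[" + permalink[start:end] + "]" + permalink[end:]


url = "https://example.com/sponsored/widget-review"
span = match_span(url, "/sponsored/")
if span is not None:
    print(highlight(url, span))
```

The same `match_span` call with `is_regex=True` and a pattern such as `/(sponsored|opinion)/` would cover both URL paths with a single classifier.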

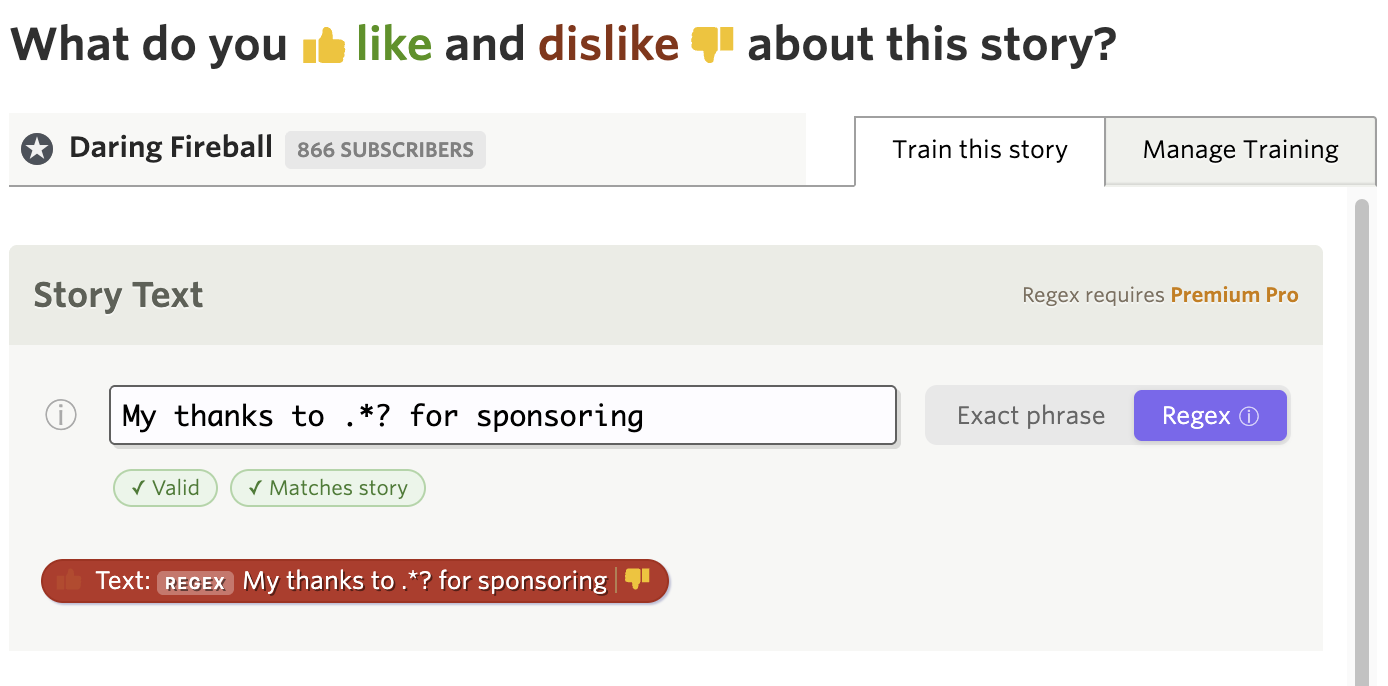

Regex matching for power users

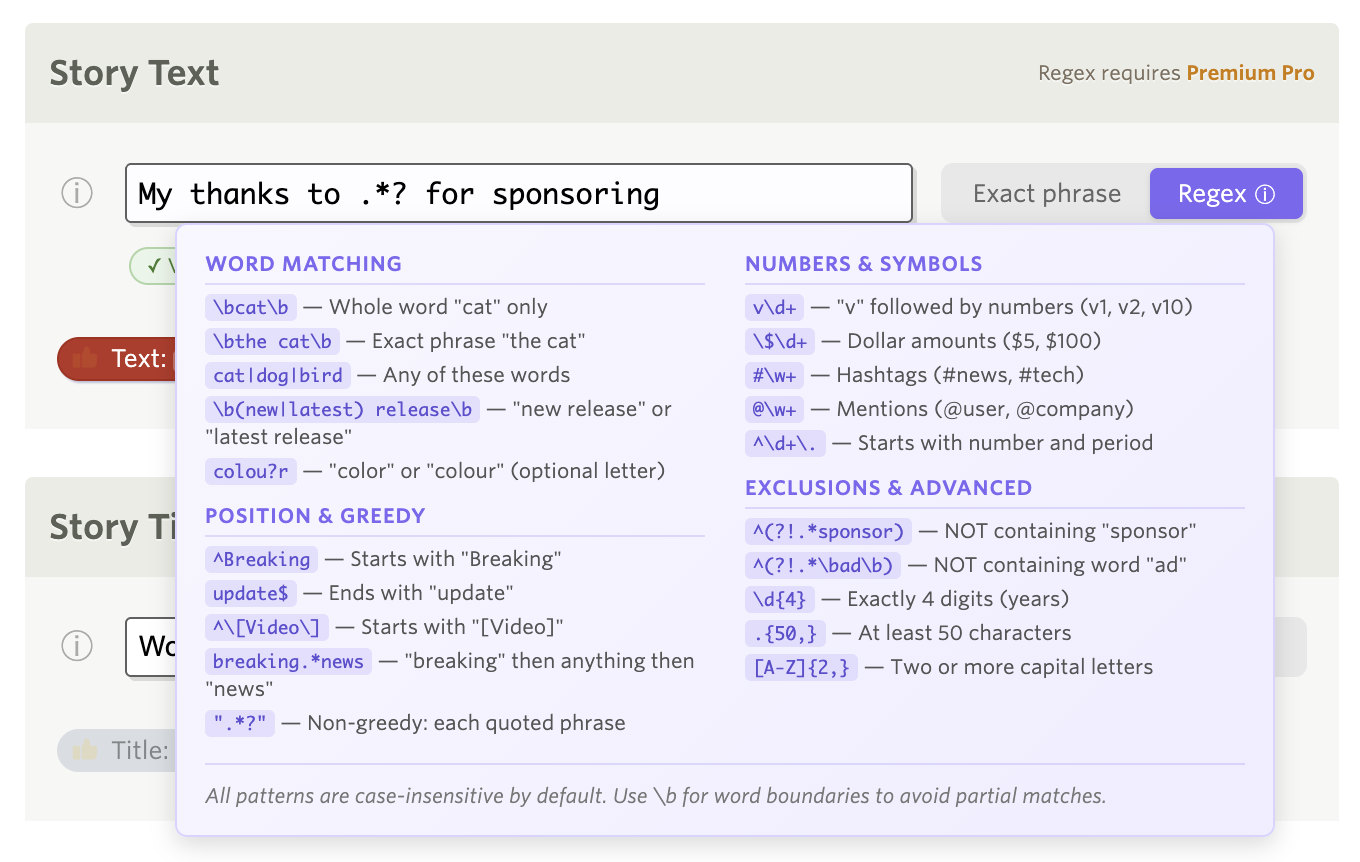

For years, the text classifier only supported exact phrase matching. If you wanted to match “iPhone” and “iPad” you needed two separate classifiers. Now you can use regex patterns in the Title, Text, and URL classifiers.

A segmented control lets you switch between “Exact phrase” and “Regex” mode. In regex mode, you get access to the full power of regular expressions:

- Word boundaries (`\b`): Match `\bapple\b` to find “apple” but not “pineapple”

- Alternation (`|`): Match `iPhone|iPad|Mac` in a single classifier

- Optional characters (`?`): Match `colou?r` to find both “color” and “colour”

- Anchors (`^` and `$`): Match patterns at the start or end of text

- Character classes: Match `[0-9]+` for any number sequence

A built-in help popover explains regex syntax with practical examples. The trainer validates your regex in real-time and shows helpful error messages if the pattern is invalid.

Regex matching is case-insensitive, so apple matches “Apple”, “APPLE”, and “apple”. This mode is available to Premium Pro subscribers.
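If you want to try these patterns before training on them, the short Python sketch below checks them with Python's standard `re` module. This assumes Python's regex dialect is close enough to the one the trainer uses for these simple patterns; `regex_matches` and `validate` are illustrative helpers, not part of NewsBlur.

```python
import re
from typing import Optional


def regex_matches(pattern: str, text: str) -> bool:
    """Case-insensitive search, mirroring the behavior described above."""
    return re.search(pattern, text, re.IGNORECASE) is not None


def validate(pattern: str) -> Optional[str]:
    """Return None if the pattern compiles, otherwise an error message."""
    try:
        re.compile(pattern)
        return None
    except re.error as exc:
        return str(exc)


examples = [
    (r"\bapple\b", "Apple announces new hardware"),   # word boundary, matches
    (r"\bapple\b", "Pineapple recipes"),              # word boundary, no match
    (r"iPhone|iPad|Mac", "A new iPad review"),        # alternation
    (r"colou?r", "Colour theory basics"),             # optional character
    (r"[0-9]+", "Top 10 feeds"),                      # character class
]
for pattern, title in examples:
    print(pattern, "|", title, "|", regex_matches(pattern, title))
```

One thing to watch with alternation: `iPhone|iPad|Mac` also matches inside longer words such as "machine", so adding word boundaries (`\b(iPhone|iPad|Mac)\b`) is usually a safer choice.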

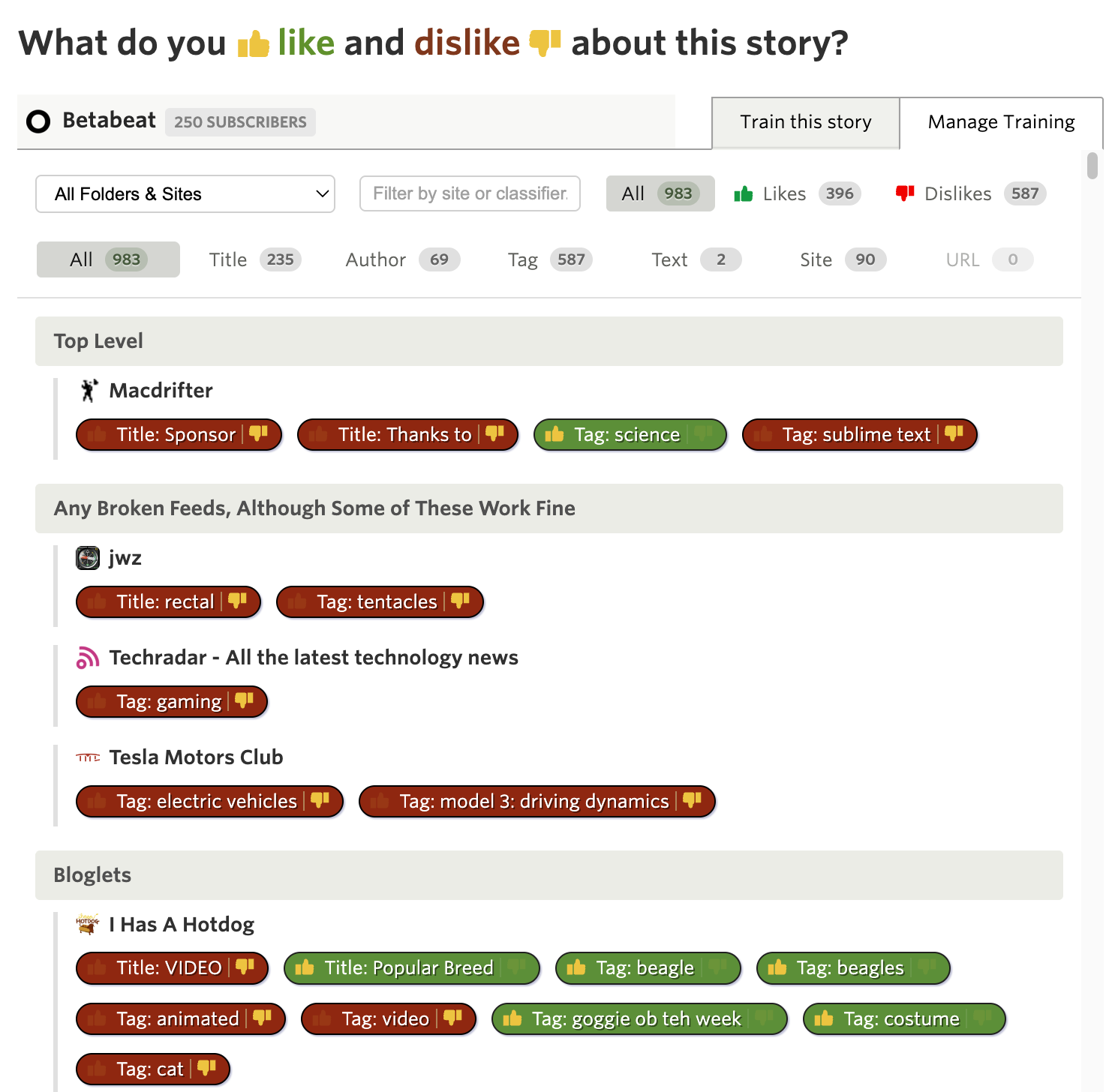

Manage all your training in one place

Over the years you may have trained NewsBlur on hundreds of authors, tags, and titles across dozens of feeds. But when you wanted to review what you’d trained, you had to open each feed’s trainer individually and click through them one by one.

The new Manage Training tab provides a consolidated view of every classifier you’ve ever trained, organized by folder. You can see everything at a glance, edit inline, and save changes across multiple feeds in a single click.

Open the Intelligence Trainer from the sidebar menu (or press the t key). You’ll now see two tabs at the top: “Site by Site” and “Manage Training”. The Manage Training tab is available everywhere you train—from the story trainer, feed trainer, or the main Intelligence Trainer dialog.

The Site by Site tab is the existing trainer you know—it walks you through each feed showing authors, tags, and titles you can train. That’s still the best way to train new feeds with lots of suggestions.

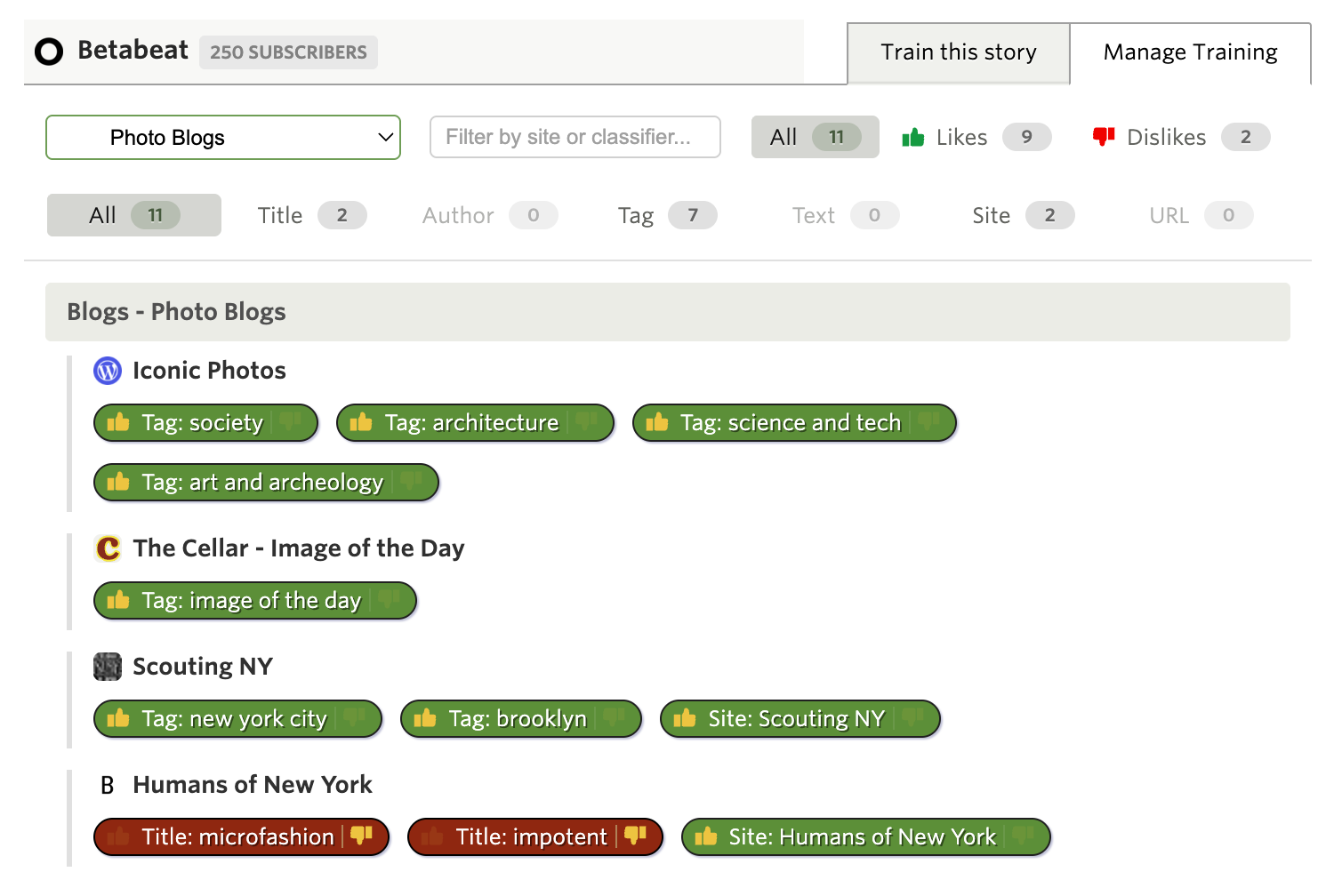

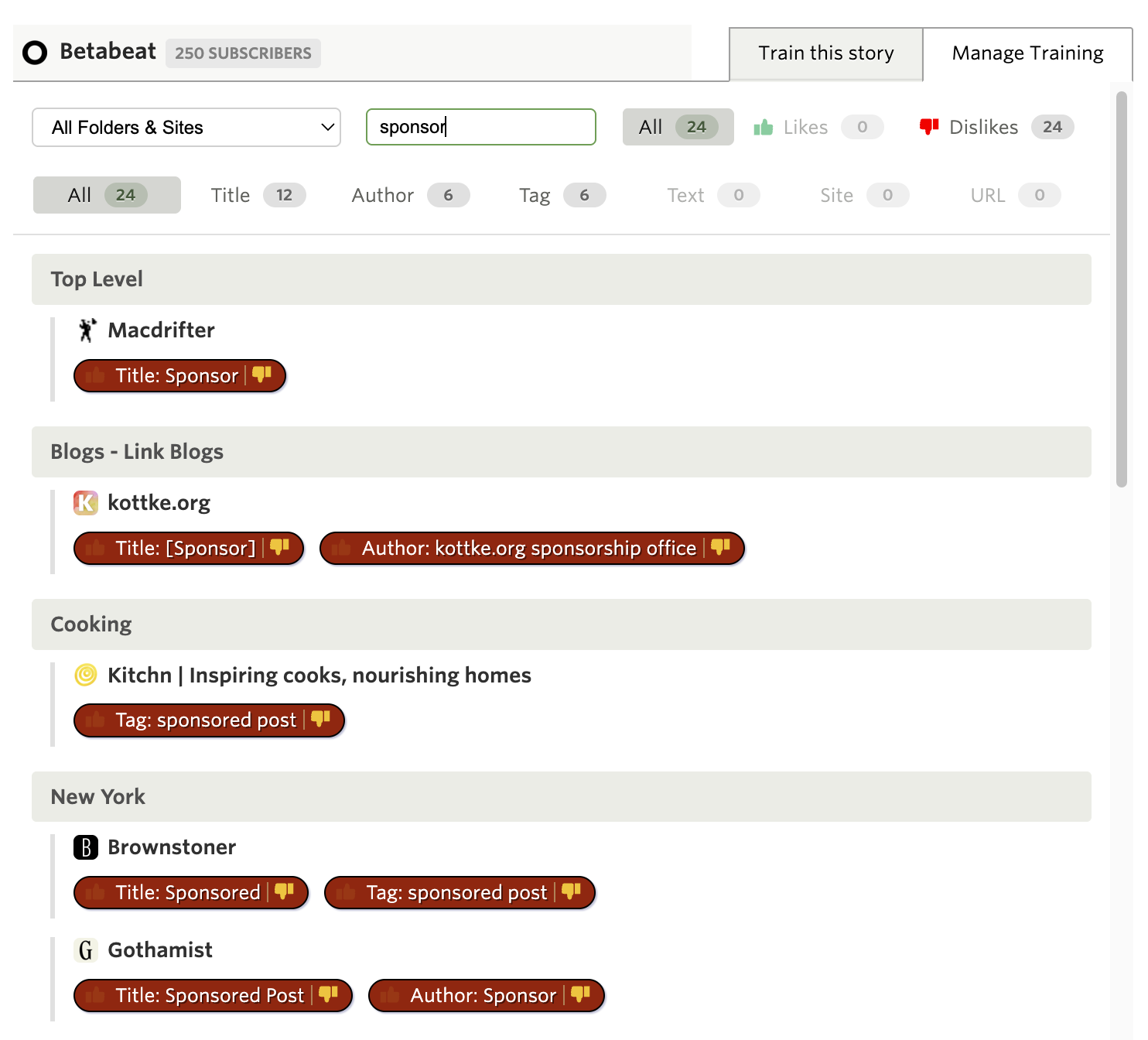

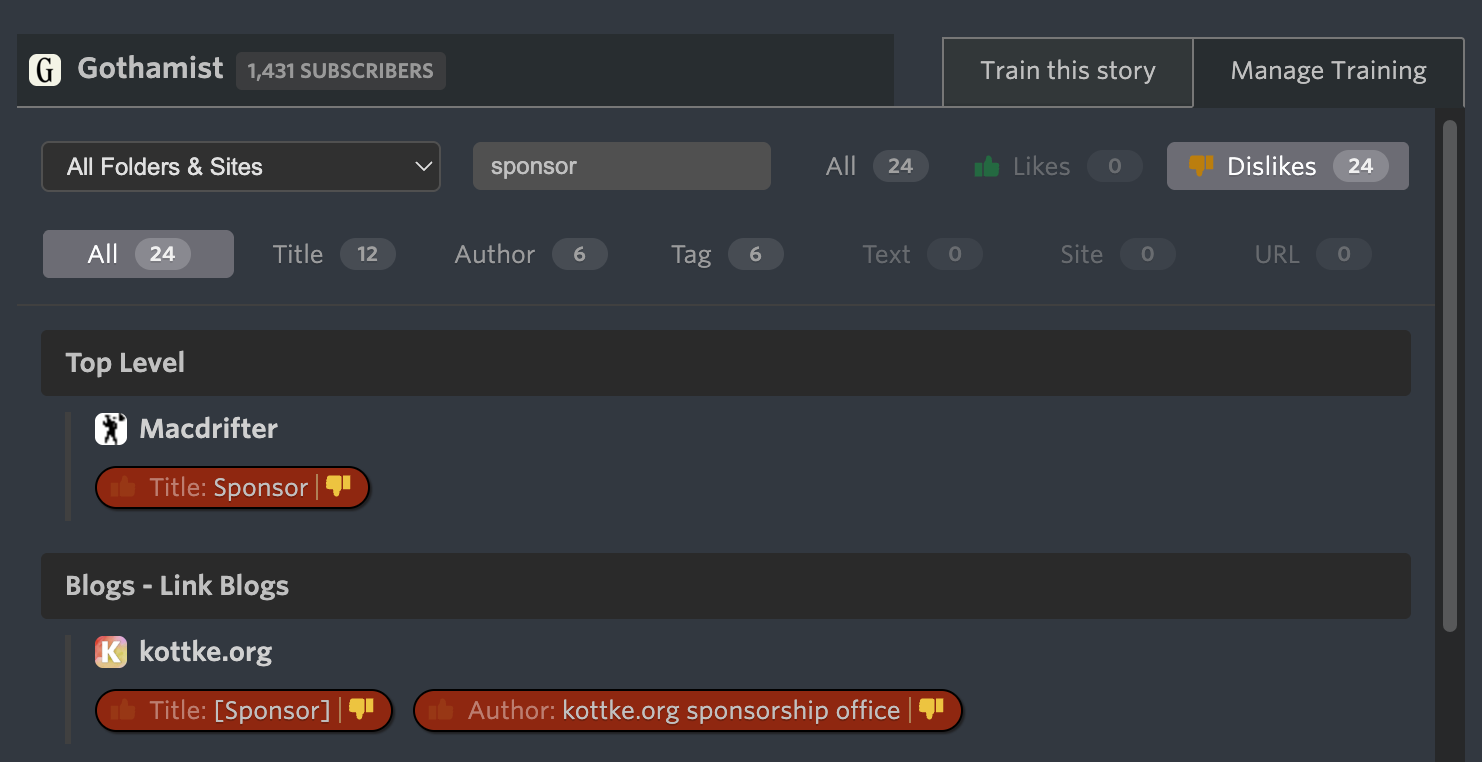

The Manage Training tab shows only what you’ve already trained. Every thumbs up and thumbs down you’ve ever given, organized by folder just like your feed list. Each feed shows its trained classifiers as pills you can click to toggle.

Filtering made easy

The real power comes from the filtering options. At the top of the tab you’ll find several ways to narrow down your training:

Folder/Site dropdown — Only folders and sites with training appear in this dropdown. Select a folder to see all training within it, or select a specific site to focus on just that feed’s classifiers. This is especially useful when you have hundreds of trained items and want to review just one area.

Instant search — Type in the search box and results filter as you type. Search matches against classifier names, feed titles, and folder names. Looking for everything you’ve trained about “apple”? Just type it and see all matches instantly.

Likes and Dislikes — Toggle between All, Likes only, or Dislikes only. Want to see everything you’ve marked as disliked? One click shows you all the red thumbs-down items across your entire training history.

Type filters — Filter by classifier type: Title, Author, Tag, Text, URL, or Site. These are multi-select, so you can show just Authors and Tags while hiding everything else. Perfect for when you want to audit just the authors you’ve trained across all your feeds.
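The way these filters combine can be sketched in a few lines of Python. The `Trained` record and the `filter_training` function are hypothetical names invented for this example, and the sketch assumes every active filter must pass (they narrow the list together).

```python
from dataclasses import dataclass
from typing import Iterable, List, Optional, Set


@dataclass(frozen=True)
class Trained:
    kind: str         # "title", "author", "tag", "text", "url", or "site"
    value: str        # the trained phrase or name
    score: int        # +1 like, -1 dislike
    feed_title: str
    folder: str


def filter_training(
    items: Iterable[Trained],
    search: str = "",
    sentiment: str = "all",            # "all", "likes", or "dislikes"
    kinds: Optional[Set[str]] = None,  # multi-select type filter
    folder: Optional[str] = None,
) -> List[Trained]:
    needle = search.lower()
    result = []
    for item in items:
        if folder is not None and item.folder != folder:
            continue
        if sentiment == "likes" and item.score <= 0:
            continue
        if sentiment == "dislikes" and item.score >= 0:
            continue
        if kinds and item.kind not in kinds:
            continue
        # Search looks at classifier names, feed titles, and folder names.
        fields = (item.value, item.feed_title, item.folder)
        if needle and not any(needle in field.lower() for field in fields):
            continue
        result.append(item)
    return result
```

For example, `filter_training(items, search="apple", kinds={"author", "tag"})` would return only trained authors and tags whose name, feed title, or folder contains "apple".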

Edit inline and save in bulk

Click any classifier pill to toggle it between like, dislike, and neutral. The Save button shows exactly how many changes you’ve made, so you always know what’s pending. Made a mistake? Just click again to undo—the count updates automatically.

When you click Save, all your changes across all feeds are saved in a single request. No more clicking through feeds one at a time to clean up old training.
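One way to picture the pending-change counter and the single save request is the Python sketch below. The `PendingChanges` class, the tuple keys, and the click order (neutral, then like, then dislike, then back to neutral) are assumptions for illustration; the post does not specify how NewsBlur implements this.

```python
from typing import Dict, Tuple

Key = Tuple[str, str, str]   # hypothetical key: (feed, classifier type, value)

# Assumed click cycle: neutral (0) -> like (1) -> dislike (-1) -> neutral (0)
NEXT_STATE = {0: 1, 1: -1, -1: 0}


class PendingChanges:
    def __init__(self, saved: Dict[Key, int]) -> None:
        self.saved = dict(saved)      # state as last saved
        self.current = dict(saved)    # state as currently shown in the pills

    def click(self, key: Key) -> None:
        """Advance one pill to its next state."""
        self.current[key] = NEXT_STATE[self.current.get(key, 0)]

    def changes(self) -> Dict[Key, int]:
        """Only the pills whose state differs from what was saved."""
        return {k: v for k, v in self.current.items() if self.saved.get(k, 0) != v}

    def count(self) -> int:
        """The number a Save button could display."""
        return len(self.changes())
```

Because the count is derived by comparing against the saved state, clicking a pill all the way around its cycle brings the count back down, and `changes()` gives one payload covering every feed that could be sent in a single request.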

Subscription tiers

| Feature | Tier Required |
|---|---|
| Title/Author/Tag/Feed classifiers | Free |
| Manage Training tab | Free |
| URL classifiers (exact phrase) | Premium |
| Text classifiers (exact phrase) | Premium Archive |
| Regex mode (Title, Text, URL) | Premium Pro |

All three features are available now on the web. If you have feedback or ideas for improvements, please share them on the NewsBlur forum.